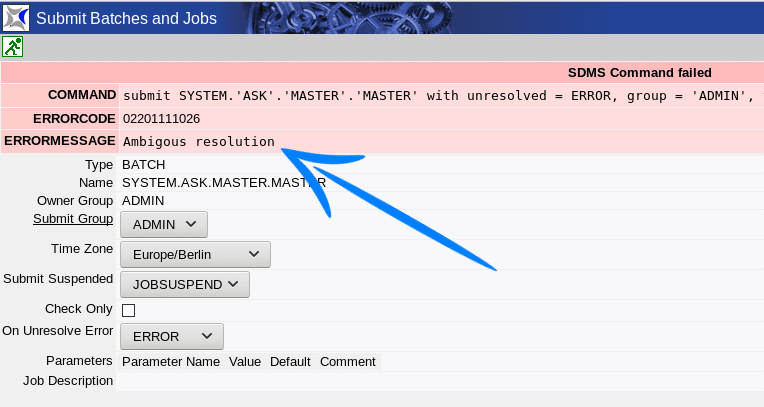

Under certain circumstances, the submission of a batch or job to BICsuite or schedulix is prevented with the error message “Ambigous resolution”. In this article we will show you how this error message can occur and how you can define dependencies so that they can be clearly and unambiguously processed in your Workload Automation System.

Example 1

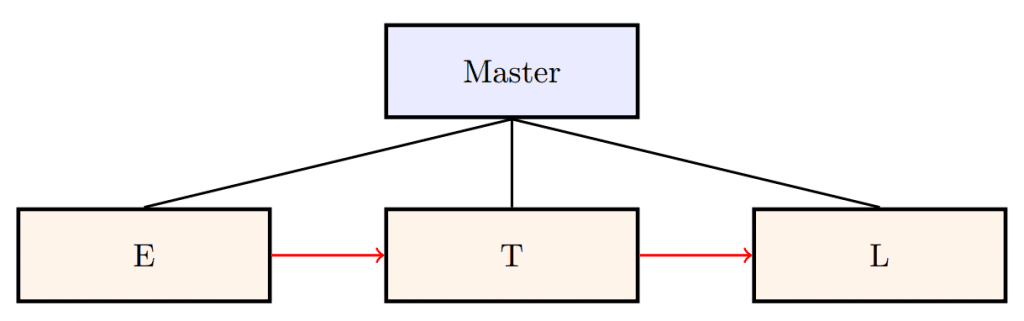

Let’s start with a very simple example. Someone wants to do some processing on a database table. Data needs to be extracted, transformed and then loaded into some other system. What is actually done is not that important; what matters is understanding the role of the jobs involved.

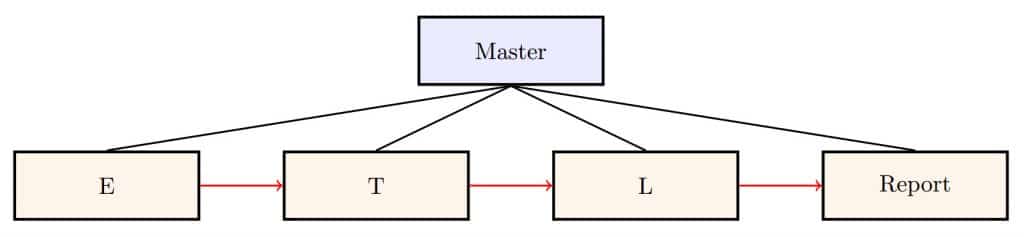

Since there are three steps to perform, three jobs are created. Let us call them E, T and L. Obviously there will be some dependencies between the jobs. It doesn’t make sense to try to load data before it is even extracted, and loading raw data will fail as well.

So we define a job flow like this:

In this picture, batches are shown in blue and jobs in orange. Dependencies are represented by red arrows. Parent-child relationships are represented by black lines, with the parents at the top and the children at the bottom.

The textual definition basically looks like this:

    create job definition system.e
    with
        type = job,
        …;

    create job definition system.t
    with
        type = job,
        required = (system.e),
        …;

    create job definition system.l
    with
        type = job,
        required = (system.t),
        …;

    create job definition system.master
    with
        type = batch,
        children = (
            system.e,
            system.t,
            system.l
        ),
        …;

As one can see from the definition above, dependencies reference job definitions, but not jobs. This is not a problem because at submit time it is perfectly clear which jobs are predecessors and which jobs are the successors.

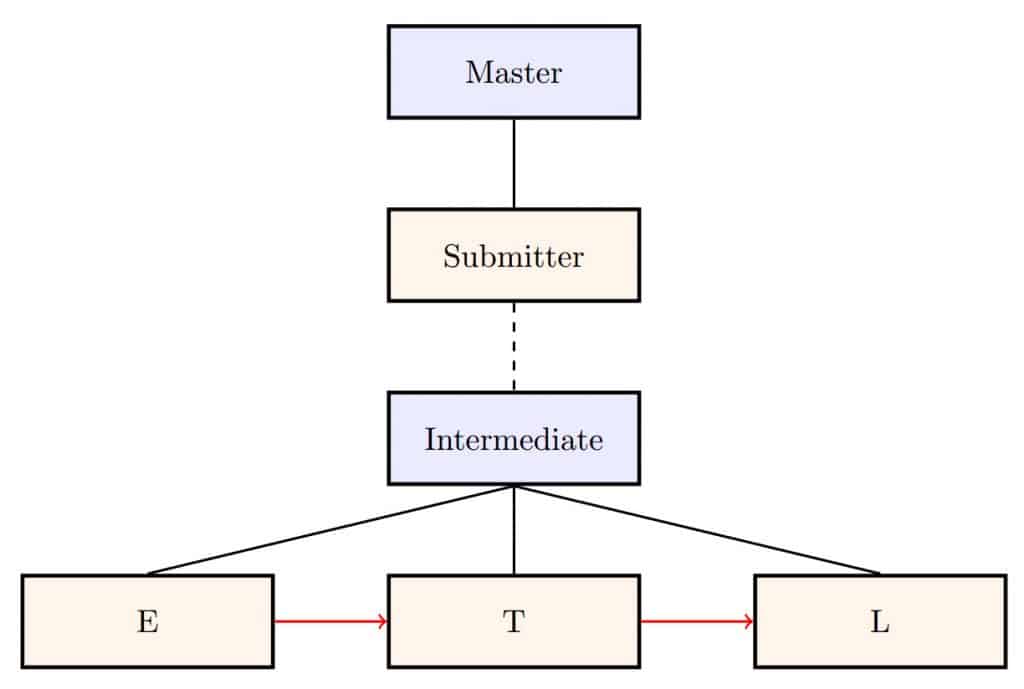

After some time it turns out that the performance of the job flow is not ideal. But fortunately the origin database table turns out to be partitioned and the processing can be done partition-wise. Clearly some changes have to be made to the original job flow.

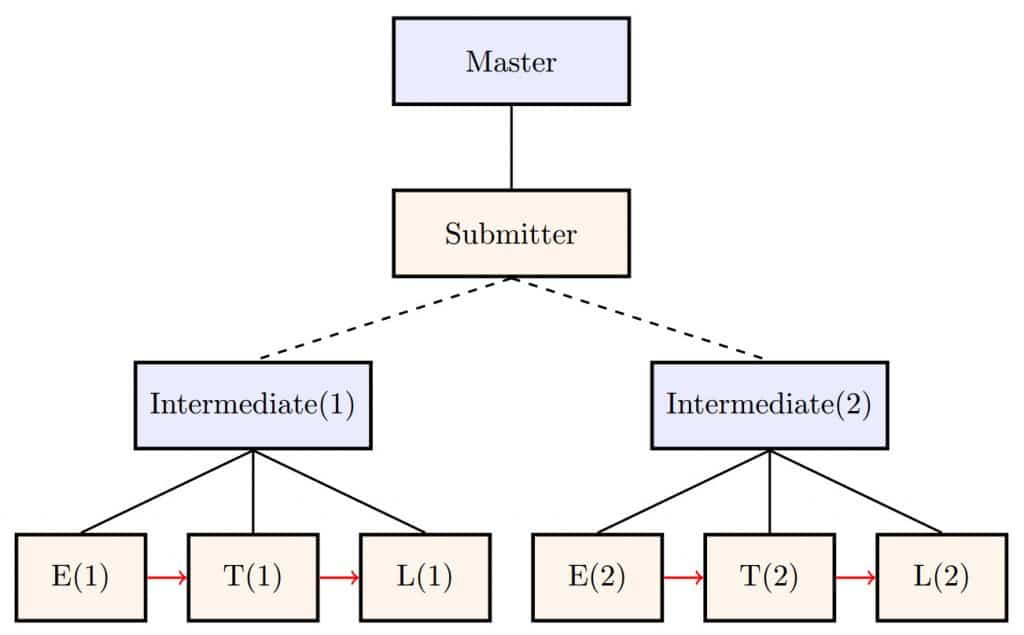

The jobs E, T and L need to be children of some intermediate batch and there is a need for a job that determines the number of partitions of the origin table and starts one instance of the intermediate batch for each partition.

The dashed parent-child connection between Submitter and Intermediate indicates that Intermediate is a dynamic child of its parent. The effect is that an instance of the child won’t be created at submit time, but can be created by the parent at runtime.

If we look at the commands that would create this structure, we see that there’s not that much difference between the initial implementation and the extended one. In fact, what used to be the batch Master is now called Intermediate and two more hierarchy levels are added.

    create job definition system.e
    with
        type = job,
        ...;

    create job definition system.t
    with
        type = job,
        required = (system.e),
        ...;

    create job definition system.l
    with
        type = job,
        required = (system.t),
        ...;

    create job definition system.intermediate
    with
        type = batch,
        children = (
            system.e,
            system.t,
            system.l
        ),
        ...;

    create job definition system.submitter
    with
        type = job,
        children = (intermediate dynamic),
        ...;

    create job definition system.master
    with
        type = batch,
        children = (submitter),
        ...;

As in real life, parents can even create multiple children at runtime. The number of children that will be created is not visible in the definition of the job flow. This is analogous to a loop in a programming language. Within the program it isn’t necessarily obvious how often the loop body will be executed. The loop body itself has no notion of how many invocations came before it or how many will follow.
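
The loop analogy can be made concrete with a small Python sketch. It is only an illustration: `run_submitter`, `fake_submit` and the partition list are hypothetical names chosen for this example, not part of the BICsuite or schedulix interface.

```python
def run_submitter(partitions, submit_child):
    """Body of a hypothetical Submitter job.

    submit_child is an assumed callback standing in for whatever mechanism
    the scheduling system offers to create a dynamic child at runtime.
    """
    created = []
    for partition in partitions:
        # One instance of the Intermediate batch per partition. The loop body
        # has no notion of how many iterations precede or follow it.
        created.append(submit_child("INTERMEDIATE", partition))
    return created


# Stand-in for the scheduler: it only records what was submitted.
submitted = []


def fake_submit(definition, partition):
    instance = f"{definition}({partition})"
    submitted.append(instance)
    return instance


# The number of partitions is only known at runtime, not in the definition.
result = run_submitter([1, 2], fake_submit)
print(result)  # ['INTERMEDIATE(1)', 'INTERMEDIATE(2)']
```

Nothing in `run_submitter` itself reveals how many children will exist, which mirrors the point that the definition of the job flow does not show the number of dynamic children.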

The picture below shows the job flow with two dynamic children (adding more won’t make the example more understandable and won’t add information).

Hence what we see is the hierarchy and dependency graph of the job flow at runtime. Although the definition didn’t specify the exact dependencies between the jobs, the system manages to create the correct and intended dependencies. It wouldn’t make sense if T(1) were to wait for E(2) to finish. And since the partitions can be processed independently, it wouldn’t make sense if T(2) were to wait for E(1) either.

What happens under the hood is that the system dynamically searches for “the best matching job” whenever it encounters a dependency definition while submitting a (partial) tree. The best matching job is defined as the nearest instance of the job definition that is being searched for. In the example above, the distance between T(1) and E(2) is clearly greater than the distance between T(1) and E(1), which is why a dependency instance is created between the latter two.
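
The “nearest instance” rule can be modelled in a few lines of Python. This is a simplified sketch, not the system’s actual implementation: it assumes that distance means the number of parent-child edges between two instances in the runtime hierarchy, and the `Node` class and helper names are invented for this example.

```python
class Node:
    """One submitted instance (job or batch) in the runtime hierarchy."""

    def __init__(self, definition, label, parent=None):
        self.definition = definition  # job definition name, e.g. "E"
        self.label = label            # instance label, e.g. "E(1)"
        self.parent = parent
        self.children = []
        if parent is not None:
            parent.children.append(self)


def ancestors(node):
    """Return the node itself followed by all its ancestors up to the root."""
    chain = []
    while node is not None:
        chain.append(node)
        node = node.parent
    return chain


def distance(a, b):
    """Count the parent-child edges on the path between a and b."""
    steps_from_b = {id(n): i for i, n in enumerate(ancestors(b))}
    for steps_from_a, n in enumerate(ancestors(a)):
        if id(n) in steps_from_b:  # first common ancestor
            return steps_from_a + steps_from_b[id(n)]
    raise ValueError("nodes are not in the same tree")


def walk(node):
    """Yield every instance in the subtree rooted at node."""
    yield node
    for child in node.children:
        yield from walk(child)


def nearest(root, requester, definition):
    """Return all instances of definition at minimal distance from requester."""
    candidates = [n for n in walk(root)
                  if n.definition == definition and n is not requester]
    best = min(distance(requester, c) for c in candidates)
    return [c for c in candidates if distance(requester, c) == best]


# Runtime hierarchy with two dynamically submitted Intermediate batches.
master = Node("MASTER", "Master")
submitter = Node("SUBMITTER", "Submitter", master)
jobs = {}
for i in (1, 2):
    inter = Node("INTERMEDIATE", f"Intermediate({i})", submitter)
    for d in "ETL":
        jobs[f"{d}({i})"] = Node(d, f"{d}({i})", inter)

print(distance(jobs["T(1)"], jobs["E(1)"]))  # 2
print(distance(jobs["T(1)"], jobs["E(2)"]))  # 4
print([n.label for n in nearest(master, jobs["T(1)"], "E")])  # ['E(1)']
```

In this model T(1) and E(1) are siblings, so the path between them has two edges, whereas reaching E(2) requires going up to Submitter and down again, giving four.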

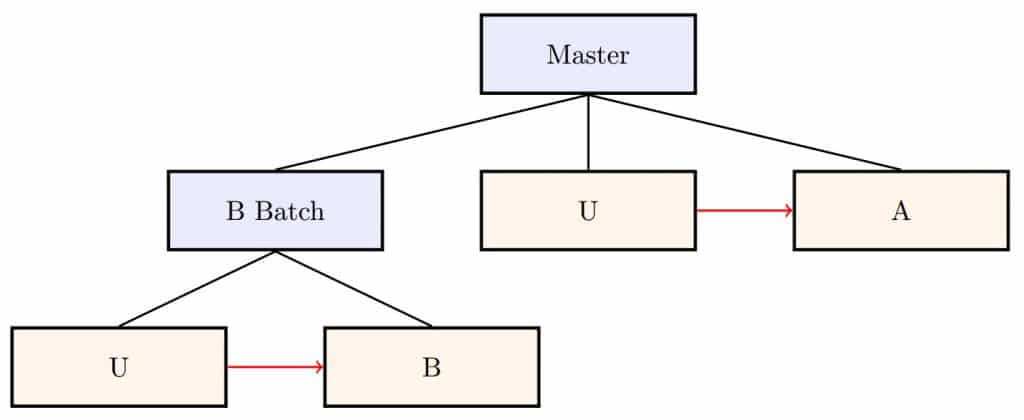

Let’s go back to the initial implementation of the job flow and assume that a report has to be created. Obviously this will have to happen after the data load has finished successfully. The picture now looks like this:

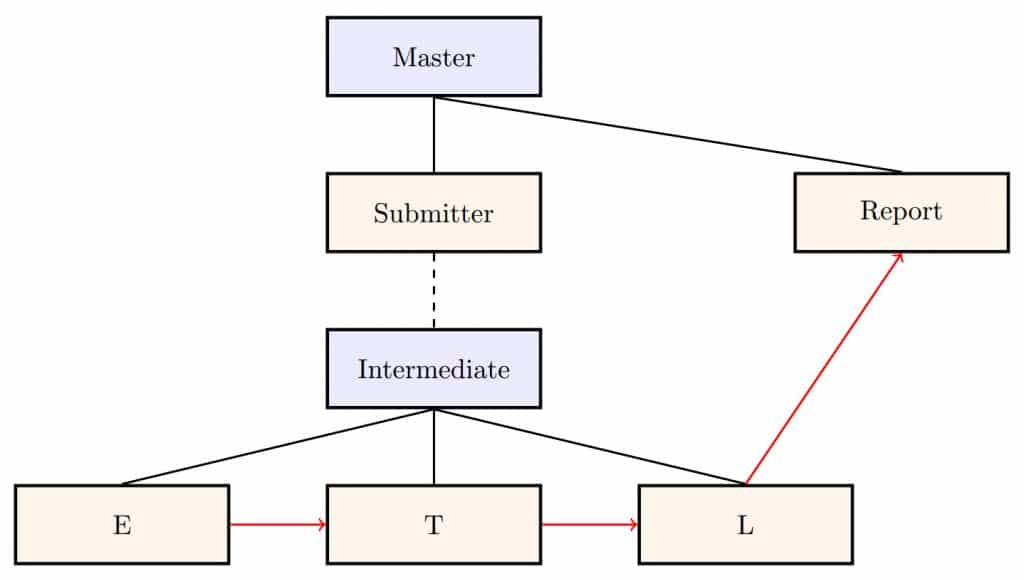

Nothing special about this. But if we now add the dynamic submit functionality to enhance the performance by processing the table partition-wise, we’ll have to be careful. We can’t just keep the dependency between L and Report as it is. Within the definition it would still look reasonable and in fact it is possible to define the job flow like this. But what immediately catches the eye is that the dependency is a cross-hierarchy dependency. Jobs at different hierarchy levels seem to be connected.

In programming, such structures often indicate design flaws. And if a result that is produced several levels deeper is relevant for the next step, the correct way to handle this is to transport this result to the upper level.

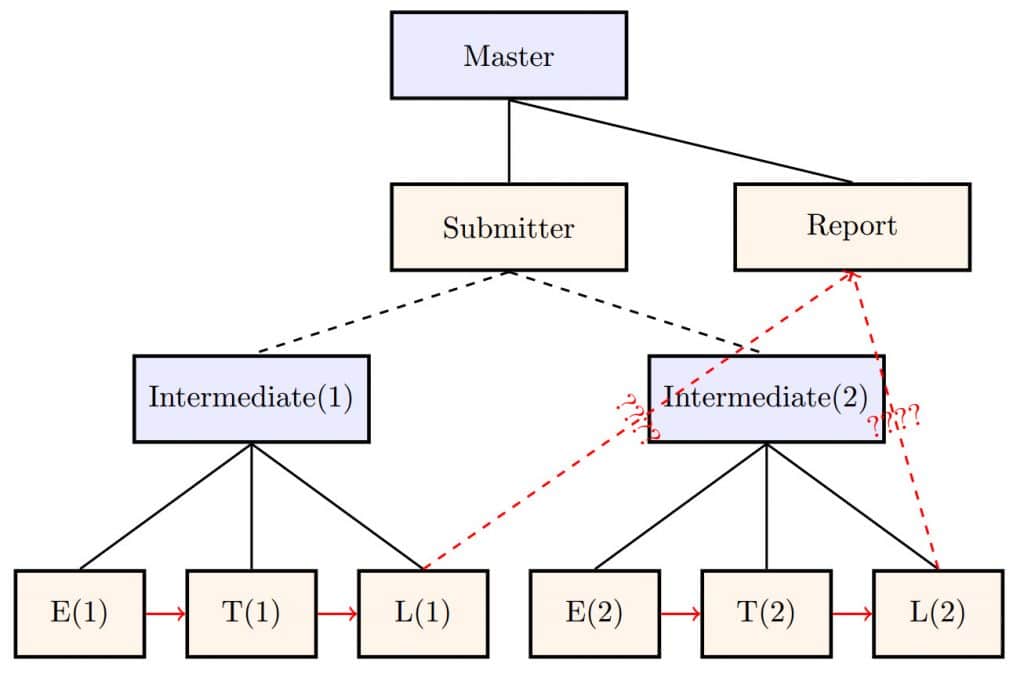

It would even be possible to submit and execute this flow (the dependency must be defined with unresolved mode defer in this case) as long as there’s only a single partition to process! As soon as the Submitter tries to submit a second instance of the Intermediate batch, things get confusing because all of a sudden it isn’t obvious any more which instances of L the Report should wait for.

Both instances of L have the same distance to Report and the system will report an ambiguous resolution. In the picture, the problematic dependencies are represented by the dashed red lines and marked with question marks.

In this example the problem can be fixed easily. Obviously the report will have to wait for all L jobs to complete successfully. In fact it has to wait for the Submitter Job and all its children to complete. Instead of creating a dependency between L and Report, a dependency is created between Submitter and Report.
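
The tie between the two L instances, and the effect of the fix, can be reproduced with a toy model in Python. It is a sketch under assumptions: distance is taken to be the number of parent-child edges between two instances, and `PARENT`, `resolve` and `AmbiguousResolution` are illustrative names, not the system’s own.

```python
class AmbiguousResolution(Exception):
    """Raised when several instances are equally near to the requester."""


# Runtime hierarchy after two dynamic submits, stored as child -> parent.
PARENT = {
    "Submitter": "Master",
    "Report": "Master",
    "Intermediate(1)": "Submitter",
    "Intermediate(2)": "Submitter",
    "L(1)": "Intermediate(1)",
    "L(2)": "Intermediate(2)",
}


def definition_of(instance):
    """Strip the instance counter: 'L(2)' -> 'L'."""
    return instance.split("(")[0]


def path_to_root(instance):
    path = [instance]
    while path[-1] in PARENT:
        path.append(PARENT[path[-1]])
    return path


def distance(a, b):
    """Edges from a up to the first common ancestor, plus edges down to b."""
    up_a, up_b = path_to_root(a), path_to_root(b)
    common = next(n for n in up_a if n in up_b)
    return up_a.index(common) + up_b.index(common)


def resolve(requester, definition):
    """Return the unique nearest instance of definition, or raise."""
    everyone = set(PARENT) | set(PARENT.values())
    ranked = sorted((distance(requester, c), c) for c in everyone
                    if definition_of(c) == definition and c != requester)
    if not ranked:
        raise LookupError(f"no instance of {definition}")
    if len(ranked) > 1 and ranked[0][0] == ranked[1][0]:
        tied = [c for d, c in ranked if d == ranked[0][0]]
        raise AmbiguousResolution(f"{requester} -> {definition}: {tied}")
    return ranked[0][1]


try:
    resolve("Report", "L")
except AmbiguousResolution as err:
    print("ambiguous:", err)  # both L(1) and L(2) are four edges away

print(resolve("Report", "Submitter"))  # Submitter
```

Because there is only one instance of Submitter, the dependency on it resolves uniquely no matter how many Intermediate batches are submitted at runtime.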

Example 2

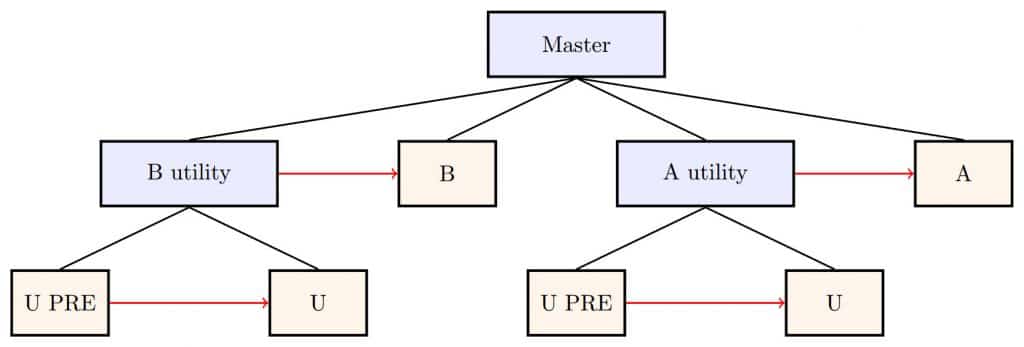

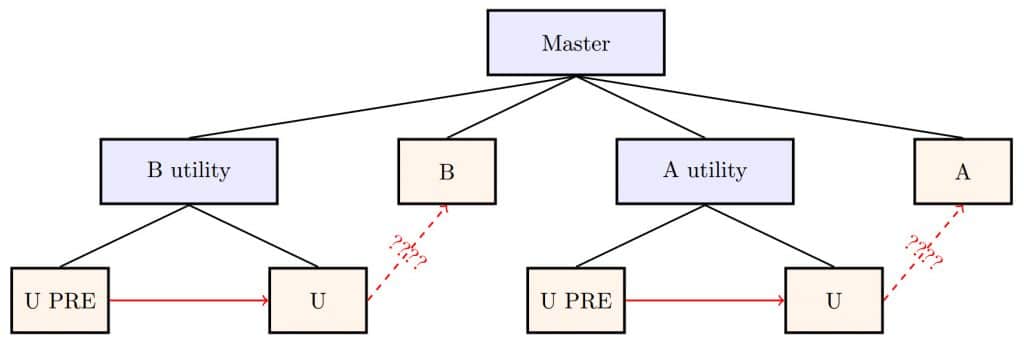

It would be a mistake to believe that an ambiguous dependency resolution always requires jobs that are instantiated at runtime (using dynamic submits or triggers). It is easily possible to create problems in other ways as well. As an example, let’s assume there are two jobs, A and B, and a generic utility U. Both A and B require this utility. The job flow (that has evolved historically) looks like this:

And this will indeed work as designed. There’s no problem with this definition. Both A and B will find the correct and intended (nearest) instance of U. Now imagine that the utility U needs a predecessor, perhaps for a kind of cleanup or other preparations.

Someone adds that job and reshapes the job flow in order to make it “beautiful”:

But this does not work! There’s no way to tell either A or B which of the instances of U they require. The order of the children within a parent is not defined. Often the children of a parent are displayed in alphabetical order, but that only makes it easier to find a specific child and has no other meaning.

From the perspective of the parent-child graph, the distance between A and A utility is exactly the same as the distance between A and B utility and therefore there’s no difference between the distances of A and each of the instances of U.

When trying to submit this job flow, the system will refuse to do so and issue an error message that indicates an ambiguous dependency resolution.

Again, this can be resolved by creating a dependency between A utility and A, and another between B utility and B, instead of having A and B depend on U directly.
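
The second example can be checked with the same kind of toy model. The hierarchy below is an assumption, since the figures are not reproduced here: A, B, A utility and B utility are taken to be siblings under one parent, each utility batch contains U plus a preparation job (called `PREP` here, a made-up name), and distance is counted in parent-child edges.

```python
# Each instance is identified by its path from the root of the submitted tree.
INSTANCES = [
    ("MASTER",),
    ("MASTER", "A"),
    ("MASTER", "B"),
    ("MASTER", "A_UTILITY"),
    ("MASTER", "A_UTILITY", "PREP"),
    ("MASTER", "A_UTILITY", "U"),
    ("MASTER", "B_UTILITY"),
    ("MASTER", "B_UTILITY", "PREP"),
    ("MASTER", "B_UTILITY", "U"),
]


def distance(p, q):
    """Edges between two instances: up to the shared prefix, then down."""
    shared = 0
    for x, y in zip(p, q):
        if x != y:
            break
        shared += 1
    return (len(p) - shared) + (len(q) - shared)


def nearest(requester, definition):
    """Return every instance of definition at minimal distance."""
    candidates = [p for p in INSTANCES
                  if p[-1] == definition and p != requester]
    best = min(distance(requester, p) for p in candidates)
    return [p for p in candidates if distance(requester, p) == best]


job_a = ("MASTER", "A")

# Depending on U directly: two candidates at the same distance, hence ambiguous.
print(nearest(job_a, "U"))
# [('MASTER', 'A_UTILITY', 'U'), ('MASTER', 'B_UTILITY', 'U')]

# Depending on the enclosing batch instead: exactly one candidate.
print(nearest(job_a, "A_UTILITY"))
# [('MASTER', 'A_UTILITY')]
```

Under these assumptions both instances of U are three edges away from A, so no nearest instance exists, while A utility is a distinct job definition with a single instance and therefore resolves unambiguously.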